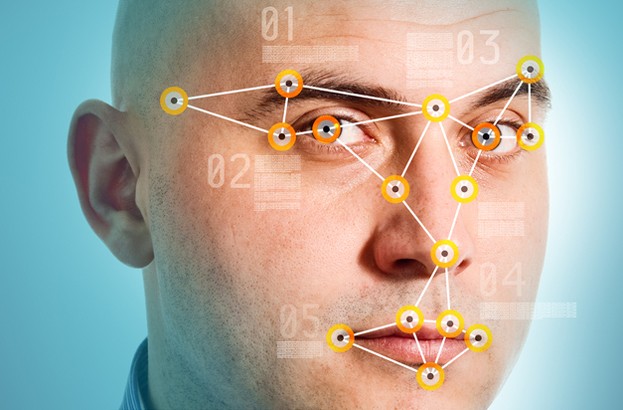

Researchers at the Massachusetts Institute of Technology (MIT) have developed a computational model that aims to capture how humans recognize faces, with the goal of reproducing that process in artificial intelligence (AI) and machine learning systems, according to a report by ZDNet.

The researchers, who are based at the MIT-headquartered Center for Brains, Minds, and Machines (CBMM), built a machine learning system that implements the new model.

The team trained the system to recognize a set of particular faces from sample images, which helped it recognize faces more accurately and in a more ‘human’ way.

The trained system also developed an additional processing step that comes into play when an image of a face is rotated. This property emerged on its own during training rather than being built into the model, and it leads the model to duplicate “an experimentally observed feature of the primate face-processing mechanism,” the team said.

Based on these findings, the researchers believe the artificial model and the brain are ‘thinking’ in a similar way.

“This is not a proof that we understand what’s going on,” says Tomaso Poggio, a professor of brain and cognitive sciences at MIT and director of the CBMM. “Models are kind of cartoons of reality, especially in biology. So I would be surprised if things turn out to be this simple. But I think it’s strong evidence that we are on the right track.”

The researchers describe their findings in a new paper in the journal Computational Biology, which also includes a mathematical proof of the model's properties.

The system is a neural network: loosely modeled on the structure of the brain, it consists of simple information-processing units, or ‘nodes’, that are organized into layers, with the nodes in each layer connected to those in the next.

As data is fed into the network and sorted according to different facial recognition criteria, individual nodes respond to different stimuli.

By identifying which nodes responded most strongly to particular criteria, the researchers were able to achieve more accurate face recognition. In addition, as the nodes ‘fired’ in different ways, the “spontaneous” rotation-related processing step also emerged.
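The article describes the network only at a high level, and the CBMM model itself is not reproduced here. As a generic illustration of what "layers of nodes" and "which nodes respond most strongly" can mean in practice, the sketch assumes a tiny fully connected feedforward network with random, untrained weights and made-up feature vectors standing in for face images. The function names (`make_layer`, `forward`, `strongest_nodes`) and the toy data are hypothetical and are not the researchers' method.

```python
import random


def relu(x: float) -> float:
    # A node "fires" only when its weighted input is positive.
    return max(0.0, x)


def make_layer(n_inputs: int, n_nodes: int, rng: random.Random) -> list[list[float]]:
    # Hypothetical layer: each node has one random weight per input.
    # A real system would learn these weights from training images.
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_nodes)]


def forward(network: list[list[list[float]]], inputs: list[float]) -> list[float]:
    # Pass the input through each layer in turn (feed-forward only)
    # and return the responses of the nodes in the final layer.
    activations = inputs
    for layer in network:
        activations = [
            relu(sum(w * x for w, x in zip(weights, activations)))
            for weights in layer
        ]
    return activations


def strongest_nodes(
    network: list[list[list[float]]],
    stimuli_by_category: dict[str, list[list[float]]],
    top_k: int = 2,
) -> dict[str, list[int]]:
    # For each category, average every final-layer node's response over
    # that category's stimuli and return the indices of the top_k nodes.
    result = {}
    for category, stimuli in stimuli_by_category.items():
        n_nodes = len(network[-1])
        totals = [0.0] * n_nodes
        for stimulus in stimuli:
            for i, response in enumerate(forward(network, stimulus)):
                totals[i] += response
        means = [total / len(stimuli) for total in totals]
        ranked = sorted(range(n_nodes), key=lambda i: means[i], reverse=True)
        result[category] = ranked[:top_k]
    return result


if __name__ == "__main__":
    rng = random.Random(0)
    # Two layers: 8 toy input features -> 16 nodes -> 6 nodes.
    network = [make_layer(8, 16, rng), make_layer(16, 6, rng)]
    # Made-up "images": five 8-number feature vectors per hypothetical face.
    stimuli = {
        name: [[rng.random() for _ in range(8)] for _ in range(5)]
        for name in ("face_a", "face_b")
    }
    print(strongest_nodes(network, stimuli))
```

Averaging over several stimuli per category, rather than using a single image, is one simple way to find nodes that respond consistently to a category; the printed indices depend entirely on the random weights and carry no meaning beyond the illustration.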

Although the research is still at a relatively early stage, it could lead to a better understanding of the mind and help scientists improve the machine learning algorithms and AI used in facial recognition technologies.

“I think it’s a significant step forward,” says Christof Koch, president and chief scientific officer at the Allen Institute for Brain Science. “In this day and age, when everything is dominated by either big data or huge computer simulations, this shows you how a principled understanding of learning can explain some puzzling findings.

“They’re only looking at the feed-forward pathway — in other words, the first 80, 100 milliseconds. The monkey opens its eyes, and within 80 to 100 milliseconds, it can recognize a face and push a button signaling that. The question is what goes on in those 80 to 100 milliseconds, and the model that they have seems to explain that quite well.”